Equalization is probably the most ubiquitous process we apply to tracks. It’s a powerful tool that we can use to shape sounds and correct systemic errors. Modern equalization is a wonder to behold. With so many filter types, emulations, and hardware options, such precise control, and so many bands available, it is easy to overdo EQ.

When I first started mastering, I was just out of university and thinking about sound in terms of physics. Naturally, that led me to investigate how different types of audio processing work and what artifacts these processes produce. A general rule that is pretty intuitive is: the more extreme the processing, the more damage it does to the audio.

So when I started seeing “Surgical EQ” pop up everywhere on the internet, referenced in mixing and mastering circles as if it were a standard technique, applied often enough to be listed in step-by-step guides on audio engineering…my eye started to twitch a bit.

As I said before, the more extreme the processing, the more destructive it is. Surgical EQ, by definition, uses very narrow Q filters (a high Q value, meaning a narrow band) to “notch” out specific frequencies. That narrow Q is extreme. When it comes to EQ, the general rule is the wider the Q, the more natural and pleasant the result. So if that’s the case, why are we using Surgical EQ in the first place?

That’s a good question. It’s THE question. Why use it? Why not use it?

Before diving into those questions, I think we need to establish some basic principles.

Principle 1: Localisation of Sound Relies on Phase

Our ears are pretty amazing. Have you considered how we can hear in three dimensions with only two ears? How can we position sound above, below, ahead of, or behind us? The secret is in the pinnae of our ears and the decoding done by our brain. When it comes to left and right, we position a sound largely by hearing it at slightly different times in each ear. This cue is known as the interaural time difference (it is closely related to the precedence effect, often called the Haas effect), and it is primarily a function of phase (the timing of the sound wave) as it enters each ear. The slightly later phase of the sound entering the right ear tells your brain that the sound is to the left.

Insert diagram of Haas Effect
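To put rough numbers on that timing cue, here is a minimal sketch. It assumes a simple straight-line model (delay = ear spacing × sin(angle) / speed of sound) and an ear spacing of 0.18 m; both the model and the names `interaural_delay` and `delay_to_phase_deg` are my own illustrative assumptions, and real heads are more complicated than this.

```python
import math

SPEED_OF_SOUND = 343.0   # m/s in air at roughly 20 degrees C
EAR_SPACING = 0.18       # m, assumed straight-line distance between the ears

def interaural_delay(angle_deg):
    """Arrival-time difference in seconds for a source angle_deg off centre."""
    return EAR_SPACING * math.sin(math.radians(angle_deg)) / SPEED_OF_SOUND

def delay_to_phase_deg(delay_s, freq_hz):
    """Phase difference in degrees that a time delay produces at freq_hz."""
    return 360.0 * freq_hz * delay_s

for angle in (10, 30, 90):
    dt = interaural_delay(angle)
    print(f"{angle:>2} deg off centre: {dt * 1e6:6.1f} us, "
          f"{delay_to_phase_deg(dt, 500):5.1f} deg of phase at 500 Hz")
```

The point of the sketch is only the scale: the differences our brain works with are well under a millisecond, so small timing changes are not negligible.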

Another way our ears localise sound is through the way our outer ear, called the pinna, shapes the frequency response. Yes, your ear is an EQ in a sense! It shapes the sound in a way that tells our brain where the sound is coming from. The brain compares what it hears against internalised sounds stored in our memory to work out where the sound is coming from in terms of up and down, forward and backward. (Since air also has frequency-dependent absorption, it helps us gauge the distance of a sound, but that’s for another time.) Isn’t that cool? That’s enough for now; let’s talk about another principle.

Principle 2: Natural Sounds Have Natural Harmonic Sequences

One of the terms we use to discuss audio is timbre. Timbre refers to the combination of a sound’s dynamic and harmonic structure. We usually use a word to describe the overall impression, such as sweet, rich, gravelly, hard, brash, etc. Sounds that occur in nature consist of a series of harmonics. We refer to the lowest one as the fundamental harmonic, and it tells our brain which note we are hearing. The other harmonics are also notes, ringing along with the fundamental according to the laws of physics in the material making the sound. Musical sounds mostly consist of harmonics that are whole-number multiples of the fundamental harmonic.

Insert image of SPAN viewing a sound, pointing out Fundamental, Harmonics, and the Harmonic Sequence.
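As a quick numerical illustration of that sequence, this sketch lists the first few harmonics of a note as whole-number multiples of its fundamental. The function name `harmonic_series` and the choice of A3 (220 Hz, assuming A4 = 440 Hz tuning) are just for illustration.

```python
def harmonic_series(fundamental_hz, count=8):
    """Frequencies of the first `count` harmonics; harmonic 1 is the fundamental."""
    return [n * fundamental_hz for n in range(1, count + 1)]

A3 = 220.0  # Hz, assuming A4 = 440 Hz tuning
for n, freq in enumerate(harmonic_series(A3), start=1):
    label = "fundamental" if n == 1 else f"harmonic {n}"
    print(f"{label:>12}: {freq:7.1f} Hz")
```

Real instruments deviate a little from these ideal multiples, but the evenly spaced ladder is the pattern to look for on an analyser.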

Because these harmonics are governed by physical laws, and our brain is constantly comparing what it hears against our mental database of sounds (as referred to in Principle 1), a sound that is consistent with its typical harmonic sequence sounds natural to our ears and is easy to identify and position.

Sounds that are generated artificially, whether electronically or digitally, sound musical to us because their harmonic sequence sounds natural. When we deviate from that, we end up with synthesised sounds that are unnatural sounding. This is neither a good nor bad thing when doing creative or experimental sound design, but we should be aware that a sound presenting as natural or unnatural is a consequence of its harmonic sequence (among other things). This can help our art as much as it can hinder it.

Consequences of a Sharp Phase Shift

If you are an audio engineer who uses Surgical EQ on a regular basis, you may be starting to feel nervous about the direction of this article. But I’m not here to wholesale dismiss Surgical EQ as a useful technique. What I am here to do, though, is ensure that you understand the cost that comes with it.

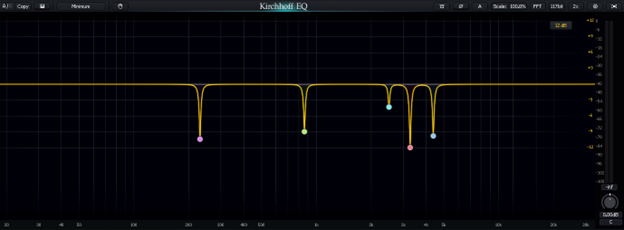

Let’s take a look at the phase response of a few EQs doing surgical moves around 1 kHz. I’ll use each EQ’s tightest Q and -12 dB of gain on the band, with the exception of bx_digital V3, which goes into NOTCH mode.

Notice two things: the sharper the Q, the steeper the phase distortion, and the deeper the cut, the larger the phase distortion.
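If you want to check this relationship without a plugin, here is a rough sketch using a textbook peaking filter built from the widely published RBJ Audio EQ Cookbook formulas. This is an assumption on my part: it is not the algorithm of any of the EQs measured above, and the helper names and the 48 kHz sample rate are illustrative. It reports the largest phase shift and how many Hz separate the two phase extremes on either side of 1 kHz.

```python
import cmath
import math

def peaking_biquad(f0, gain_db, q, fs=48000.0):
    """Peaking-EQ coefficients from the RBJ Audio EQ Cookbook formulas."""
    amp = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    b = (1.0 + alpha * amp, -2.0 * math.cos(w0), 1.0 - alpha * amp)
    a = (1.0 + alpha / amp, -2.0 * math.cos(w0), 1.0 - alpha / amp)
    return b, a

def phase_deg(b, a, freq, fs=48000.0):
    """Phase of the filter's frequency response at freq, in degrees."""
    z1 = cmath.exp(-2j * math.pi * freq / fs)  # z^-1 on the unit circle
    num = b[0] + b[1] * z1 + b[2] * z1 * z1
    den = a[0] + a[1] * z1 + a[2] * z1 * z1
    return math.degrees(cmath.phase(num / den))

def phase_summary(f0, gain_db, q):
    """Return (peak phase shift in degrees, Hz between the two phase extremes)."""
    b, a = peaking_biquad(f0, gain_db, q)
    freqs = range(100, 10001)  # 1 Hz grid from 100 Hz to 10 kHz
    phases = [phase_deg(b, a, f) for f in freqs]
    lo = min(range(len(phases)), key=phases.__getitem__)
    hi = max(range(len(phases)), key=phases.__getitem__)
    return max(abs(phases[lo]), abs(phases[hi])), abs(freqs[hi] - freqs[lo])

for gain_db in (-6.0, -12.0):
    for q in (1.0, 4.0, 16.0):
        peak, span = phase_summary(1000.0, gain_db, q)
        print(f"gain {gain_db:6.1f} dB, Q {q:4.1f}: "
              f"peak shift {peak:5.1f} deg across {span:5d} Hz")
```

For this particular design, the size of the peak shift is set by the depth of the cut, while a higher Q squeezes that same shift into a narrower band, which is what makes the phase curve steeper. Other EQ designs may behave differently in the details.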

Now, consider what this processing does to the sound in terms of our spatial localisation of the sound.

The phase shift that you see means that in that region, our brain now hears the area just below 1 kHz as happening sooner and the area just above 1 kHz as happening later. How will this affect the localisation of the sound? Let’s do some case studies.

For a sound panned to about 10:00 on the left, most of the sound would appear at 10:00, as intended. However, 900 Hz would sound further left (negative phase means the sound arrives sooner), and 1.1 kHz would sound further right (positive phase means the sound arrives later). This means that we have smeared the sound from left to right, making it hard to pinpoint where the sound is coming from.

For a sound that is panned centre, the different timing of 900 Hz and 1.1 kHz means that instead of smearing it from left to right, we have smeared it in terms of its distance from us. 900 Hz will arrive first (before even the fundamental!), implying it is closer than the rest of the sound, and 1.1 kHz will arrive later, sounding further away than the rest of the sound. This will also be happening to some extent in the first example.

At this point you might wonder why every EQ doesn’t do this to the signal. Well, it actually does. However, our brain can interpret the more gradual phase shift of a wider Q as the object making the sound simply being larger. This is because the shift in perceived location is gradual rather than sudden.

Sharp phase shifts make sounds difficult to localise, blurring the imaging and making the soundstage feel confusing.

Consequences of Disrupting the Harmonic Sequence

The second problem we introduce by notching certain frequencies is that we effectively delete a harmonic from the sequence. This is doubly problematic since it not only disrupts the natural sequence, but it also disrupts a different harmonic of the sequence depending on the note being played! Check out this notch affecting the 3rd and 2nd harmonic of the note respectively:

What does this mean? It means that as the note changes, we are changing the timbre of the instrument. Every note will have a different timbre. This adds unnecessary mental processing to understanding the sound, since each note at first appears to our brain as essentially a different instrument.
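The arithmetic behind this is easy to sketch. Assuming a fixed notch at 1 kHz and equal-tempered note frequencies with A4 = 440 Hz (both assumptions chosen for illustration, as is the helper name `nearest_harmonic`), this shows which harmonic of each note sits closest to the notch.

```python
def nearest_harmonic(fundamental_hz, notch_hz):
    """Harmonic number whose frequency lies closest to notch_hz, plus that frequency."""
    n = max(1, round(notch_hz / fundamental_hz))
    return n, n * fundamental_hz

NOTCH_HZ = 1000.0
# Equal-tempered note frequencies in Hz, assuming A4 = 440 Hz
notes = {"B3": 246.94, "E4": 329.63, "B4": 493.88, "B5": 987.77}

for name, f0 in notes.items():
    n, freq = nearest_harmonic(f0, NOTCH_HZ)
    print(f"{name}: harmonic {n} at {freq:7.2f} Hz is "
          f"{abs(freq - NOTCH_HZ):5.2f} Hz from the notch")
```

The same notch lands on the 4th harmonic of one note, the 3rd of another, the 2nd of a third, and the fundamental itself of a fourth, so each note loses a different part of its sequence.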

But that isn’t all. When the harmonic sequence is disrupted, it also can create the impression that the upper harmonics of the sound are “disconnected” from the lower harmonics. This creates the impression that the single instrument is, in fact, coming from two different sources. Sometimes this is used specifically to stylise the sound, but it should be a deliberate decision.

What does it mean?

The really tricky thing about these consequences is that individual tracks with a single notch on them might not sound all that different on their own; in fact they might sound better at first! However, when this is done on several elements of the mix, and in particular if it is done on a critical, familiar element, such as vocals, it can create a presentation that feels cluttered, nebulous, and confusing. Nothing feels wrong at first listen, but gradually as we sit through more of the music, our brain starts to feel…annoyed, fatigued, cramped.

This insidious nature of phase and tonal distortion can ruin a mix, and it can be very hard to tell why. Most likely, it will just feel puzzling why it doesn’t sound as clear and open as you think it should.

So when should we use Surgical EQ?

The main case for Surgical EQ is when the acoustic environment or build of the instrument has created a resonance. Resonance is where a narrow band of frequencies is emphasised significantly over the other frequencies in a static way. You can see it on a spectrum analyser easily, but it can also be confused with a natural sound fairly easily. Luckily, resonance has a particular kind of sound. Rather than explain in words, let me tell you how to hear it for yourself.

To become familiar with resonance, take the same very narrow band you would use for a notch on an EQ and boost it instead of cutting. What you hear is resonance. Learn to identify this sound. This is when you will want to use a notch filter to correct the systemic issue at that frequency.
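If you would rather generate a test file than reach for a plugin, here is a minimal sketch of that exercise. It applies a narrow boost to white noise using a textbook peaking filter (RBJ Audio EQ Cookbook formulas) and writes the result to a WAV file. The +12 dB gain, Q of 30, 1 kHz centre, and the file name `resonance_demo.wav` are all assumptions picked for illustration, and the pure-Python loop is slow but dependency-free.

```python
import math
import random
import struct
import wave

def peaking_biquad(f0, gain_db, q, fs):
    """Normalised peaking-EQ coefficients (RBJ Audio EQ Cookbook formulas)."""
    amp = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha / amp
    b = ((1.0 + alpha * amp) / a0, -2.0 * math.cos(w0) / a0, (1.0 - alpha * amp) / a0)
    a = (1.0, -2.0 * math.cos(w0) / a0, (1.0 - alpha / amp) / a0)
    return b, a

def apply_biquad(samples, b, a):
    """Filter a list of samples with the direct form I difference equation."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out

def write_wav(path, samples, fs):
    """Write mono 16-bit audio, normalised to 90% of full scale."""
    peak = max(max(abs(s) for s in samples), 1e-9)
    frames = b"".join(
        struct.pack("<h", int(32767 * 0.9 * s / peak)) for s in samples
    )
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(fs)
        wav.writeframes(frames)

if __name__ == "__main__":
    fs = 48000
    random.seed(0)
    noise = [random.uniform(-1.0, 1.0) for _ in range(2 * fs)]  # 2 s of white noise
    b, a = peaking_biquad(f0=1000.0, gain_db=12.0, q=30.0, fs=fs)
    write_wav("resonance_demo.wav", apply_biquad(noise, b, a), fs)
```

Listen to the result next to plain noise, then try other centre frequencies; the ringing, whistling quality is the thing to memorise.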

Since the resonance is usually at a fixed frequency, you do not run into the harmonic sequence consequences mentioned before provided the cut is the right depth, but the phase distortion consequences will still remain. So this means you gain a proper harmonic balance, and a resonance-free timbre, but perhaps at the expense of the imaging and soundstage. Often this is easily the lesser of two evils.

The Main Takeaways

The two ideas that I hope you gain from this article are:

The first idea is that Surgical EQ always comes with consequences. It can make the imaging of your mix elements “blurred” in the soundstage; instruments will feel smeared and difficult to locate precisely. It can also make sounds feel unnatural as the timbre shifts with different notes.

The second idea is very important. Whenever you run into a time that you need Surgical EQ, you should note it and try to track down the cause. Surgical EQ is something you use to rescue poorly recorded source audio. Find the source of the problem and fix it, so you do not need to use Surgical EQ in the first place. This is how we become better at what we do. Happy mixing!